Adjusting meta-analyses for the presence of publication bias

Sladekova, M., Webb, L. E. A., Field, A. P.

Stage: Project completed. Published in Psychological Methods. An Open Access version (accepted manuscript) is available on Sussex SRO.

Science is riddled with bias of all shapes and sizes. One of the most prevalent problems affecting the availability of scientific findings is publication bias: studies reporting statistically significant results are more likely to get published than studies reporting statistically non-significant, but equally valid, results. As a result, a sizable chunk of research findings ends up unpublished in researchers' file drawers (at least in the traditional publication model).

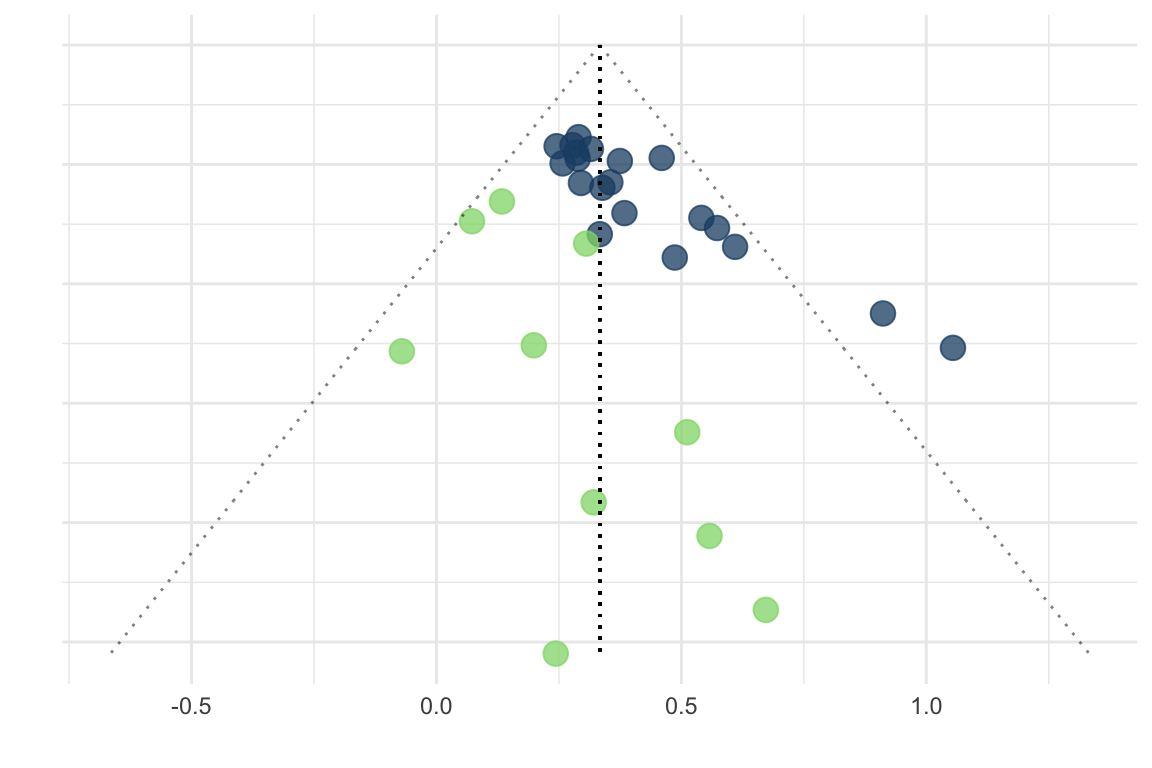

This creates a problem when we want to synthesise findings across multiple research papers using meta-analysis: the effects that end up being published are likely to overestimate the true effect. A number of statistical methods have been developed to address this problem. They adjust the meta-analytic estimate in a way that is intended to account for publication bias.
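The mechanism behind this overestimation can be illustrated with a small simulation. The sketch below is not taken from the project; it assumes a true standardised effect of 0.2, group sizes drawn between 20 and 200, an approximate standard error of sqrt(2/n) for each study, and a hypothetical selection rule under which significant positive results are always published while non-significant ones are published only 10% of the time.

```python
import math
import random


def simulate_studies(k, true_effect, rng):
    """Simulate k primary studies; each is an (effect estimate, standard error) pair."""
    studies = []
    for _ in range(k):
        n_per_group = rng.randint(20, 200)     # assumed range of group sizes
        se = math.sqrt(2 / n_per_group)        # rough SE of Cohen's d for small effects
        estimate = rng.gauss(true_effect, se)  # sampling error around the true effect
        studies.append((estimate, se))
    return studies


def is_published(estimate, se, rng, p_publish_nonsig=0.1):
    """Hypothetical selection rule: significant positive results (z > 1.96) are
    always published; all other results only with probability p_publish_nonsig."""
    if estimate / se > 1.96:
        return True
    return rng.random() < p_publish_nonsig


def fixed_effect_mean(studies):
    """Inverse-variance weighted (fixed-effect) meta-analytic mean."""
    weights = [1 / se**2 for _, se in studies]
    return sum(w * est for w, (est, _) in zip(weights, studies)) / sum(weights)


rng = random.Random(2023)
all_studies = simulate_studies(2000, true_effect=0.2, rng=rng)
published = [s for s in all_studies if is_published(s[0], s[1], rng)]

print(f"All studies:       {fixed_effect_mean(all_studies):.3f}")
print(f"Published studies: {fixed_effect_mean(published):.3f}")
```

Pooling every simulated study recovers an estimate close to 0.2, whereas pooling only the "published" subset gives a noticeably larger value, even though no individual study is biased.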

Available methods include Trim-and-Fill, the Precision-Effect Test (PET), the Precision-Effect Estimate with Standard Error (PEESE), the Weighted Average of Adequately Powered Studies (WAAP), p-curve, p-uniform, and selection models. Each of these methods has its own strengths and weaknesses, and the accuracy of the adjusted estimates it produces depends on the conditions in which it is applied. This project examined how the estimates in published meta-analyses change after they are adjusted for publication bias with one of these methods.
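To give a flavour of how a regression-based adjustment works, here is a minimal sketch of PET and PEESE. It assumes the usual formulation: observed effect sizes are regressed on their standard errors (PET) or on their sampling variances (PEESE) by weighted least squares with inverse-variance weights, and the intercept is read as the effect expected from a hypothetical, perfectly precise study. The helper names and the effect sizes are made up for illustration, and the conditional rule that picks between the two estimates based on a significance test of the PET intercept is omitted.

```python
def wls_line(x, y, w):
    """Weighted least squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def pet_estimate(effects, ses):
    """PET: regress effects on standard errors; the intercept is the predicted
    effect for a study with SE = 0."""
    weights = [1 / se**2 for se in ses]
    intercept, _ = wls_line(ses, effects, weights)
    return intercept


def peese_estimate(effects, ses):
    """PEESE: same idea, with the sampling variance (SE squared) as the predictor."""
    weights = [1 / se**2 for se in ses]
    intercept, _ = wls_line([se**2 for se in ses], effects, weights)
    return intercept


# Hypothetical effect sizes (Cohen's d) and SEs showing a small-study pattern.
effects = [0.45, 0.38, 0.30, 0.22, 0.15]
ses = [0.30, 0.25, 0.18, 0.12, 0.08]
weights = [1 / se**2 for se in ses]
naive = sum(w * d for w, d in zip(weights, effects)) / sum(weights)

print(f"Unadjusted (fixed-effect) mean: {naive:.3f}")
print(f"PET estimate:   {pet_estimate(effects, ses):.3f}")
print(f"PEESE estimate: {peese_estimate(effects, ses):.3f}")
```

Because the less precise studies in this toy example report larger effects, both regression slopes are positive and both intercepts fall below the unadjusted mean. How far they fall, and whether that is closer to the truth, is exactly what depends on the conditions discussed above.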

We collected a sample of over 400 meta-analyses from 90 published papers. The adjustment method for each meta-analysis was selected based on the characteristics of the meta-analytic sample (such as sample size or the heterogeneity of primary effects). In other words, instead of selecting a single method and applying it to all meta-analyses indiscriminately, we allocated an adjustment method to each meta-analysis based on how that method was likely to perform under the given conditions. We compared how the estimates changed across the different methods, and also how they changed when we made different assumptions about the level of publication bias and the population effect size.

Data and materials: https://osf.io/k9hqm/

Preregistration: https://osf.io/kxjs3

Since its publication, the dataset has contributed to two follow-up studies looking into publication bias using Bayesian methods:

Bartoš, F., Maier, M., Shanks, D., Stanley, T. D., Sladekova, M., & Wagenmakers, E. J. (2023). Meta-Analyses in Psychology Often Overestimate Evidence for and Size of Effects. https://psyarxiv.com/tkmpc/

Bartoš, F., Maier, M., Wagenmakers, E. J., Nippold, F., Doucouliagos, H., Ioannidis, J. P. A., Otte, W. M., Sladekova, M., Fanelli, D., & Stanley, T. D. (2023). Footprint of publication selection bias on meta-analyses in medicine, economics, and psychology. https://arxiv.org/abs/2208.12334